Project 1

Project Description

Sergei Mikhailovich Prokudin-Gorskii (1863-1944) was a pioneer of color photography in the early 20th century. He envisioned color photography in a monochrome world and put the idea into practice by recording three exposures of every scene onto a glass plate through a red, a green, and a blue filter. His photos depict the last years of the Russian Empire. They were purchased by the Library of Congress in 1948 and have since been digitized, rendered in color, and made public online.

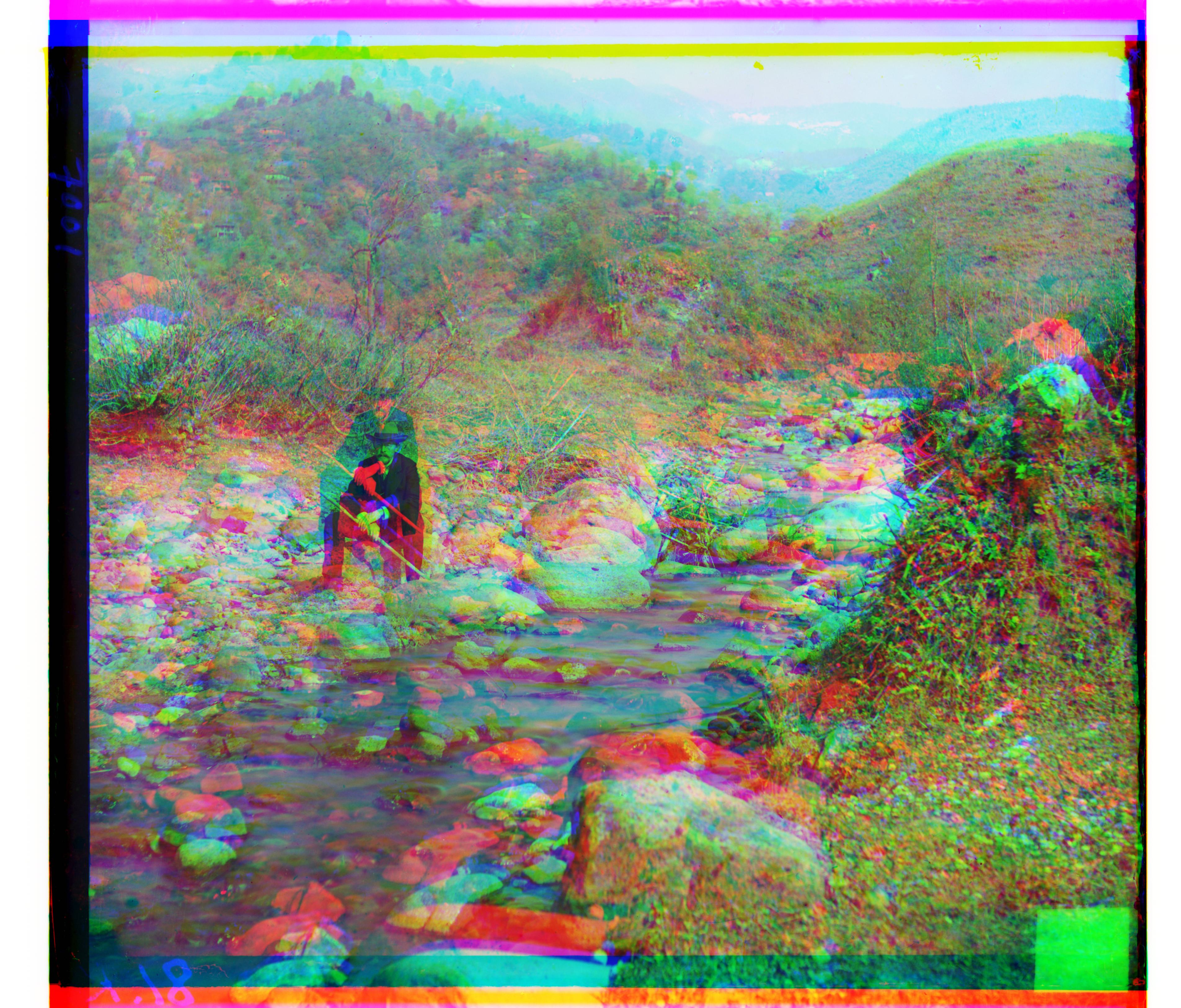

The goal of this project is to take the digitized Prokudin-Gorskii glass plate images, each containing three monochrome exposures, and use image processing techniques to automatically produce a single color image with as few visual artifacts as possible.

The main challenge is image alignment. Because the three monochrome exposures were taken one after another, the subject rarely stayed perfectly still across all three, so the channels do not line up exactly. This is also the key flaw in Prokudin-Gorskii's approach.
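As a minimal sketch of the setup, assuming the digitized plate is loaded as a 2-D NumPy array with the blue, green, and red exposures stacked from top to bottom in three equal-height strips, splitting the plate and stacking the channels into a color image could look like this. The helper names `split_plate` and `stack_rgb` are hypothetical and not taken from the project's code.

```python
import numpy as np


def split_plate(plate: np.ndarray) -> tuple[np.ndarray, np.ndarray, np.ndarray]:
    """Split a plate into (blue, green, red) strips.

    Assumption: the exposures are stacked top to bottom as B, G, R in three
    equal-height strips; any leftover rows at the bottom are dropped.
    """
    height = plate.shape[0] // 3
    b = plate[:height]
    g = plate[height:2 * height]
    r = plate[2 * height:3 * height]
    return b, g, r


def stack_rgb(r: np.ndarray, g: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Stack three single-channel images of equal shape into an H x W x 3 RGB image."""
    return np.dstack([r, g, b])
```

Stacking the strips without shifting them first produces the color fringing that the alignment step is meant to remove.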

Small Size Image Alignment (exhaustive search)

For small images (JPG format), where each monochrome channel usually has around 120k pixels, I used exhaustive search to find the best alignment. Using the blue channel as the reference, I aligned the red and green channels by circularly shifting each one over every displacement in [-15, 15] pixels along both the x and y axes, scoring each candidate with either the sum of squared differences (SSD) or normalized cross-correlation (NCC). I also implemented an option to crop 2% from all four sides of each monochrome channel before alignment, to remove the influence of the black borders.
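A minimal NumPy sketch of this exhaustive search follows. It assumes `np.roll` for the circular shift, returns shifts as (dy, dx), i.e. (rows, columns), and negates SSD so that both metrics follow a "higher is better" convention. The function names and default arguments are illustrative, not the project's actual code.

```python
import numpy as np


def crop_border(img: np.ndarray, frac: float = 0.02) -> np.ndarray:
    """Drop `frac` of the height and width from each of the four sides."""
    h, w = img.shape
    dy, dx = int(h * frac), int(w * frac)
    return img[dy:h - dy, dx:w - dx]


def ssd_score(a: np.ndarray, b: np.ndarray) -> float:
    """Negated sum of squared differences, so that higher means more similar."""
    return -float(np.sum((a - b) ** 2))


def ncc_score(a: np.ndarray, b: np.ndarray) -> float:
    """NCC of the mean-subtracted images (1.0 = equal up to positive gain and offset)."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0


def align_exhaustive(moving, reference, window=15, score=ncc_score, crop=0.02):
    """Return the (dy, dx) circular shift of `moving` that best matches `reference`."""
    # Crop both channels first so the dark plate borders do not dominate the score.
    moving = crop_border(np.asarray(moving, dtype=np.float64), crop)
    reference = crop_border(np.asarray(reference, dtype=np.float64), crop)
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            # np.roll wraps pixels around the edges, i.e. a circular translation.
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            s = score(shifted, reference)
            if s > best_score:
                best_score, best_shift = s, (dy, dx)
    return best_shift
```

For example, `align_exhaustive(g, b, score=ssd_score)` would search the green channel against the blue reference using SSD. Cropping before the search means the 2% margin never enters either score.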

In my experiments, the two similarity metrics gave the same results, but the choice of whether to crop did make a difference. Below are example small images with the best green and red channel displacements, with and without cropping.

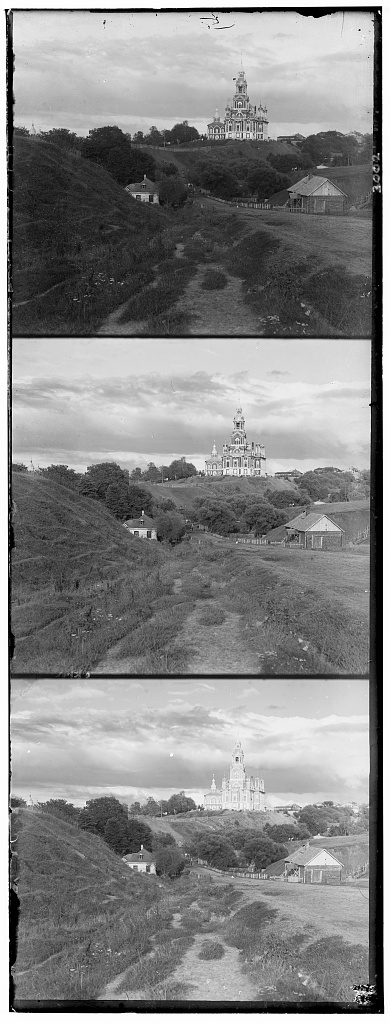

Each example is shown as three panels: original, no crop, and crop.

| Image | Setting | Green translation | Red translation |
| --- | --- | --- | --- |
| cathedral | no crop | [1, -1] | [7, -1] |
| cathedral | crop | [5, 2] | [11, 3] |
| monastery | no crop | [-6, 0] | [9, 1] |
| monastery | crop | [-3, 0] | [3, 2] |

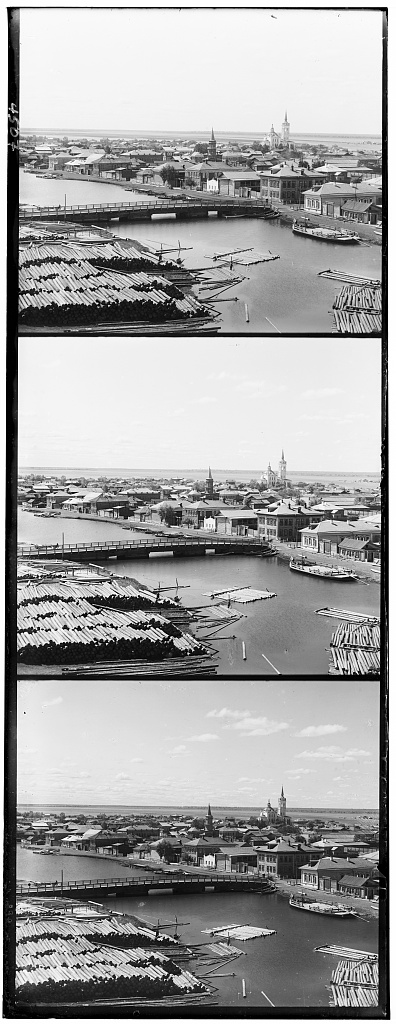

| Image | Setting | Green translation | Red translation |
| --- | --- | --- | --- |
| tobolsk | no crop | [3, 2] | [6, 3] |
| tobolsk | crop | [3, 2] | [6, 3] |

As shown above, cropping gives better alignment than no cropping for cathedral and monastery, while for tobolsk both settings find the same displacements.

Large Size Image Alignment (pyramid search)

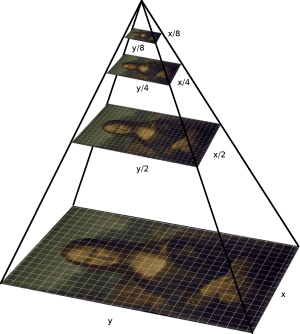

For large images (TIF format), where each monochrome channel usually has around 10 million pixels, exhaustive search becomes prohibitively expensive. To address this, I implemented an image pyramid algorithm.

After splitting the original plate into its three monochrome channels, my image pyramid algorithm first downscales each channel to the coarsest level, where the total number of pixels is less than 90k. At the coarsest level, I run the same exhaustive search used for small images over a [-15, 15] window. I then upscale by a factor of 2 at each step until reaching the original image size. At each step, I first double the best displacement found at the previous, coarser level, then refine it within a [-5, 5] window.
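The coarse-to-fine procedure can be sketched recursively as below. This is a minimal sketch under stated assumptions: downscaling averages 2x2 blocks (the project's actual resampling method is not specified), only NCC is used, cropping is left out, and the names `downscale2`, `search_around`, and `align_pyramid` are hypothetical.

```python
import numpy as np


def ncc_score(a, b):
    """Normalized cross-correlation of the mean-subtracted images."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0


def downscale2(img):
    """Halve both dimensions by averaging non-overlapping 2x2 blocks (assumed method)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])


def search_around(moving, reference, center, radius):
    """Exhaustive NCC search over circular shifts within `radius` of `center`."""
    cy, cx = center
    best_score, best_shift = -np.inf, center
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            s = ncc_score(np.roll(moving, (dy, dx), axis=(0, 1)), reference)
            if s > best_score:
                best_score, best_shift = s, (dy, dx)
    return best_shift


def align_pyramid(moving, reference, max_pixels=90_000, coarse_window=15, refine_window=5):
    """Coarse-to-fine alignment; returns the (dy, dx) shift at full resolution."""
    moving = np.asarray(moving, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    if moving.size < max_pixels:
        # Coarsest level: plain exhaustive search over the full window.
        return search_around(moving, reference, (0, 0), coarse_window)
    # Solve the half-resolution problem first, then double and refine its answer.
    dy, dx = align_pyramid(downscale2(moving), downscale2(reference),
                           max_pixels, coarse_window, refine_window)
    return search_around(moving, reference, (2 * dy, 2 * dx), refine_window)
```

Because each level only refines by [-5, 5] around the doubled estimate, the reachable displacement grows with the number of levels, which is how shifts well beyond 15 pixels can be found at full resolution.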

As with small images, I supported both SSD and NCC as similarity metrics, as well as the option to crop 2% from all four sides of each monochrome channel before alignment. In my results, cropping always performed at least as well as not cropping, and the two metrics showed no significant difference in performance. Generating each color image takes roughly 20 seconds.
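A rough count of pixel comparisons suggests why the pyramid pays off. This back-of-the-envelope estimate rests on assumptions not stated in the project: cost is proportional to (number of candidate shifts) × (pixels compared), each channel has N ≈ 10^7 pixels, and a single-level exhaustive search would need a window of about [-160, 160] to cover the largest displacements in the results (around 155 pixels for melons). With factor-2 downscaling, four halvings take 10^7 pixels down to about 3.9 × 10^4, below the 90k threshold.

$$
\begin{aligned}
C_{\text{exhaustive}} &\approx (2 \cdot 160 + 1)^2 \cdot 10^7 = 321^2 \cdot 10^7 \approx 1.0 \times 10^{12} \\
C_{\text{pyramid}} &\approx 31^2 \cdot 3.9 \times 10^4 + 11^2 \cdot \left(1.6 \times 10^5 + 6.3 \times 10^5 + 2.5 \times 10^6 + 10^7\right) \\
&\approx 3.7 \times 10^7 + 1.6 \times 10^9 \approx 1.6 \times 10^9
\end{aligned}
$$

Under these assumptions the pyramid needs roughly 600 times fewer comparisons, and most of its work is the [-5, 5] refinement at the two finest levels.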

| Image | Green translation | Red translation |
| --- | --- | --- |
| harvesters | [60, 15] | [126, 13] |
| icon | [40, 17] | [89, 23] |
| sculpture | [33, -11] | [139, -26] |
| three generations | [52, 5] | [112, 7] |
| train | [43, 0] | [93, 31] |
| church | [12, -8] | [25, -14] |
| lady | [56, -6] | [114, -16] |
| melons | [82, 4] | [155, -1] |

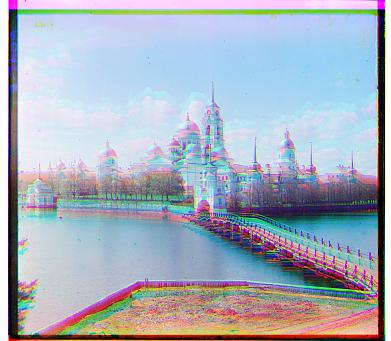

| Image | Green translation | Red translation |
| --- | --- | --- |
| onion church | [52, 22] | [110, -1] |
| self portrait | [113, -2] | [64, -6] |
| emir | [50, 23] | [38, 31] |

As shown above, alignment is perfect for harvesters, icon, sculpture, three generations, and train. My pyramid algorithm works fine for church, lady, melons, and onion church, but it fails for self portrait and emir. If I increase the crop from 2% to 5%, church, lady, and onion church become perfect, while harvesters and sculpture drop from perfect to fine.

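In self portrait and emir, one of the three monochrome channels appears shifted upward relative to the other two. I suspect that aligning on edges or gradients, rather than on the raw red, green, and blue intensities, would solve the problem, since a region that is bright under one filter can be dark under another while its edges stay in the same place.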
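The project does not implement this idea. As a hedged sketch only, assuming gradient magnitude from `np.gradient` as the edge feature and the same circular-shift NCC search as before, an edge-based alignment could look like the following. The names `gradient_magnitude` and `align_on_edges` are hypothetical.

```python
import numpy as np


def gradient_magnitude(img):
    """Edge-strength map: magnitude of the finite-difference image gradient."""
    gy, gx = np.gradient(np.asarray(img, dtype=np.float64))
    return np.hypot(gx, gy)


def ncc_score(a, b):
    """Normalized cross-correlation of the mean-subtracted images."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0


def align_on_edges(moving, reference, window=15):
    """Exhaustive circular-shift search scored on gradient magnitudes, not raw intensities."""
    fm, fr = gradient_magnitude(moving), gradient_magnitude(reference)
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            s = ncc_score(np.roll(fm, (dy, dx), axis=(0, 1)), fr)
            if s > best_score:
                best_score, best_shift = s, (dy, dx)
    return best_shift
```

A channel with inverted contrast (bright where the reference is dark) has the same gradient magnitude as the reference, so this search can still recover its shift, whereas raw-intensity NCC scores the correct shift at -1. Whether this fixes self portrait and emir in practice is untested here.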

Other Image Results

| Image | Green translation | Red translation |
| --- | --- | --- |
| adobe wall | [3, 0] | [3, 0] |
| cliff castle | [-1, 0] | [15, 0] |
| catholic monastery | [49, -3] | [101, -3] |

floodgates